TL;DR

A new meta-analysis shows that automated agents (chatbots, algorithms, robots) can match human agents in how customers respond to them, provided they are deployed under the right conditions. For sales teams and AI strategists, the takeaway is to align the tool with the task and the stage of the interaction rather than assume that humans always outperform machines.

“Automated Versus Human Agents: A Meta-Analysis of Customer Responses to Robots, Chatbots, and Algorithms and Their Contingencies”

- Authors & Affiliations: Katja Gelbrich (Catholic University of Eichstätt-Ingolstadt), Holger Roschk (Aalborg University Business School), Sandra Miederer (Catholic University of Eichstätt-Ingolstadt), Alina Kerath (Catholic University of Eichstätt-Ingolstadt)

- Journal: Journal of Marketing (2025)

- Link: https://doi.org/10.1177/00222429251344139

Description, Key Takeaways, Methodology & Relevance

Description & Methodology:

This paper reports a meta-analysis of 943 effect sizes drawn from 327 empirical studies, examining how customers respond to three types of automated agents (robots, chatbots, algorithms) compared with human agents in marketing and sales-adjacent contexts. The authors identify contingency factors (e.g., type of agent, task complexity, stage of interaction) that determine when automated agents perform on par with human agents.

Key Takeaways:

- Customers may enter interactions skeptical of automated agents, but when the agent's performance and relevance are high, their behavior (choice, purchase) often mirrors how they behave with a human agent.

- The three agent types (robots, chatbots, algorithms) differ in their contingencies: what works for a chatbot may not generalize to a robot or a purely algorithmic agent.

- Some factors increase the agent's “social presence” (making it feel more humanlike), while others highlight its “automated presence” (its identity as a machine). Both dimensions shape how customers respond.

- The implication for business: deploy automated agents in roles where they can deliver utilitarian value (speed, accuracy, scale), and reserve human agents for tasks where social presence, empathy, and nuance matter. The authors frame the case for automation around relieving labor shortages and freeing up employee capacity.

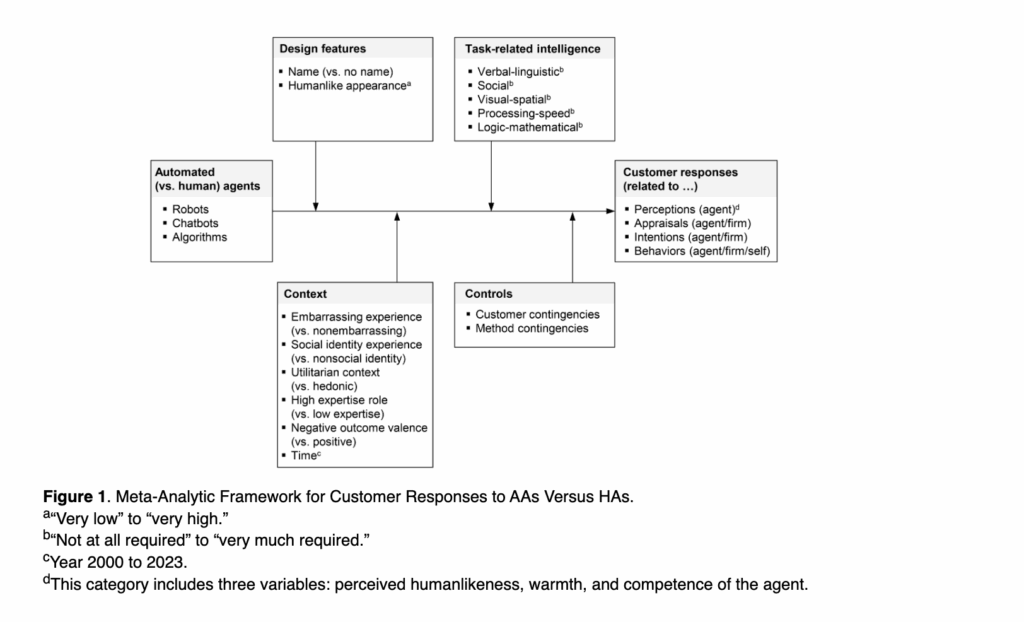

Figure 1 — Meta-Analytic Framework for Customer Responses to Automated vs. Human Agents

What It Shows

Figure 1 presents the conceptual model the authors used to structure their meta-analysis of 943 effect sizes across 327 empirical studies (2000–2023).

It maps how different types of AI agents (robots, chatbots, algorithms) influence customer responses through three main drivers:

- Design Features

  - Name vs. no name

  - Humanlike appearance

  These influence whether customers feel a social connection or perceive the agent as “coldly mechanical.”

- Task-Related Intelligence

  - Dimensions such as verbal-linguistic, social, visual-spatial, processing speed, and logic-mathematical skills. These determine whether the automated agent appears capable of handling the specific sales or service task.

- Contextual Factors

  - Emotional situations (embarrassing vs. neutral experiences)

  - Social identity relevance (e.g., whether the interaction touches on personal identity or belonging)

  - Utilitarian vs. hedonic contexts (functional vs. pleasure-driven purchases)

  - Expertise requirement (high vs. low)

  - Outcome valence (positive vs. negative results)

  - Time (how these perceptions evolved between 2000 and 2023)

All of these lead to customer responses, grouped into:

- Perceptions (e.g., warmth, competence, human-likeness)

- Appraisals (attitudes toward agent or firm)

- Intentions (purchase, recommendation)

- Behaviors (actual engagement or conversion)

Why It Matters

This framework shows that customers react to AI not simply because it’s a “robot” or “chatbot,” but because of how it’s designed, what kind of task it performs, and the situation it’s used in.

For sales and marketing teams, it highlights that deploying AI tools effectively requires understanding these interaction layers—especially which contexts need a “human touch.”

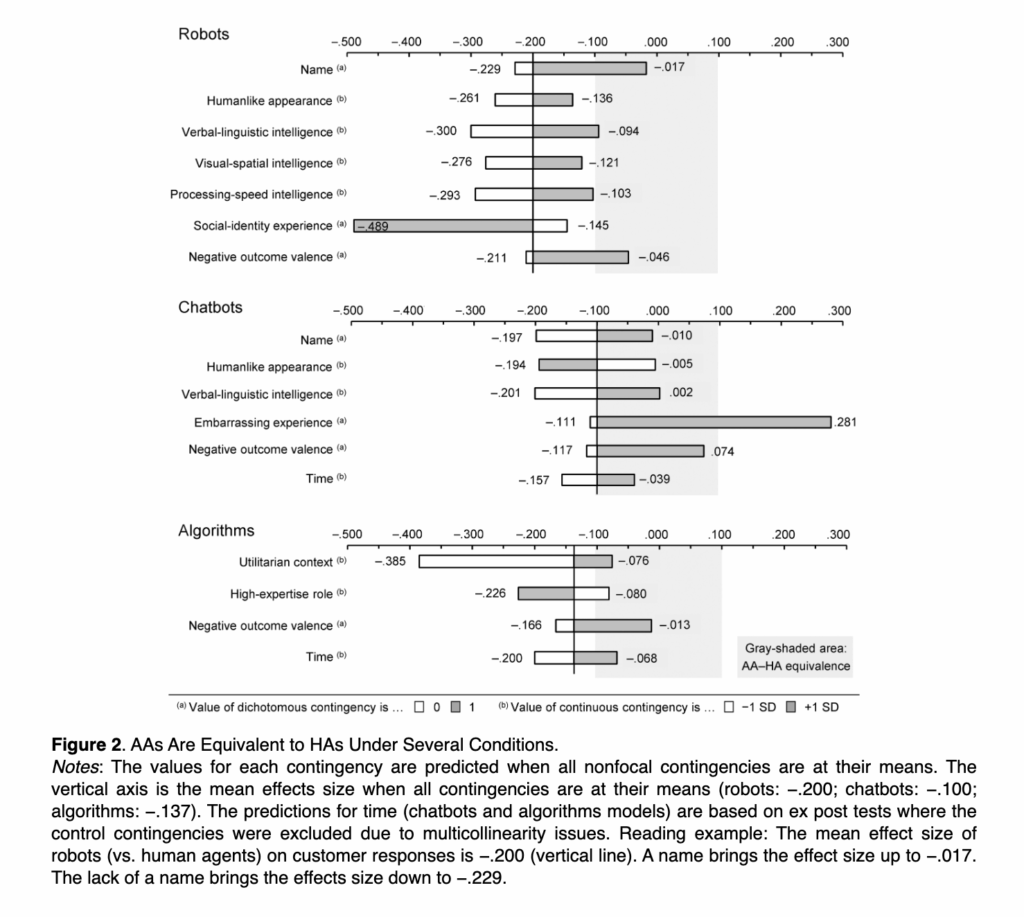

Figure 2 — When Automated Agents Equal Human Agents

What It Shows

Figure 2 quantifies how close automated agents (AAs)—robots, chatbots, or algorithms—come to matching human agents (HAs) across specific contingencies.

The gray shaded areas indicate where AAs perform equivalently to humans in terms of customer responses.

Key Findings by Category

🤖 Robots

- On average, robots underperform humans (effect size ≈ –0.20).

- Adding a name almost eliminates the gap (the effect size rises to –0.017).

- Robots with a humanlike appearance, or with verbal-linguistic or visual-spatial intelligence, also narrow the gap.

- However, when the context involves social-identity experiences, the gap widens (–0.489)—people prefer humans in socially sensitive situations.

- Negative outcomes (complaints, failures) also magnify the preference for humans.

💬 Chatbots

- Chatbots perform nearly on par with humans (average ≈ –0.10).

- The difference disappears, and even reverses, when customers face embarrassing situations such as health or financial issues (effect ≈ +0.28). People may prefer the privacy and lack of judgment a bot offers.

- With negative outcomes, chatbots fare worse (–0.117) because empathy becomes important.

📊 Algorithms

- Algorithms (e.g., recommendation systems) start at a slightly worse baseline (–0.137).

- But in utilitarian contexts (functional tasks such as pricing or logistics), the gap narrows (–0.076).

- High-expertise roles and negative outcomes still favor humans.

Why It Matters

The chart shows that AI can rival—or even outperform—humans under the right design and context.

For instance:

- Use chatbots for sensitive or routine tasks where customers value privacy and efficiency.

- Use human agents for social identity or high-stakes contexts where empathy matters.

- Design robots with human cues (names, faces, language) to build trust and comfort.

This paper offers rigorous evidence on how AI (automated agents) can support or replace human efforts in sales and marketing contexts. For executives or sales leaders considering AI certification or AI-driven transformation, the insights help frame a strategy: which agents to deploy, at what stage of the sales funnel, and how to measure their effectiveness. This matters for building capability, driving revenue, and aligning AI initiatives with business value rather than novelty.

If you’re a sales leader, marketing executive, or non-technical executive looking to integrate AI into your revenue operations, SVCH’s Certification Program for Chief AI Officer (CAIO) is tailored for you. We cover how to evaluate AI use cases like automated agents, align them with sales funnel stages, and build governance around performance metrics and stakeholder readiness. Enroll now to turn research findings like these into actionable strategy and competitive advantage.

👉 Learn more about our CAIO certification: https://svch.io/caio-cp/
